Introducing Apple Intelligence on Apple Platforms

Apple's annual Worldwide Developers Conference (WWDC) rarely disappoints, and this year was no exception. Among the many announcements, the advances in machine learning (ML) across Apple platforms stood out. Here are some of the key highlights from WWDC 2024 and how Apple is using machine learning to improve user experiences across its ecosystem.

Machine Learning on Apple Platforms

Machine learning is already deeply embedded in Apple apps and features such as Photos, the Apple Watch ECG feature, and Siri, where it makes everyday interactions smarter and more intuitive. At WWDC 2024, Apple revealed new ways it is putting machine learning to work to make devices more capable.

Apple Intelligence: Elevating Writing and Text Processing

Apple introduced new system-wide Writing Tools that help users create, rewrite, and refine text, including proofreading and summarization. Privacy is central to the design: Apple Intelligence handles requests on-device where possible and sends only more complex tasks to Private Cloud Compute, Apple's privacy-focused server infrastructure. Because Writing Tools are built into the system's standard text and web views, apps built with UIKit and SwiftUI can offer these features with little or no extra code.

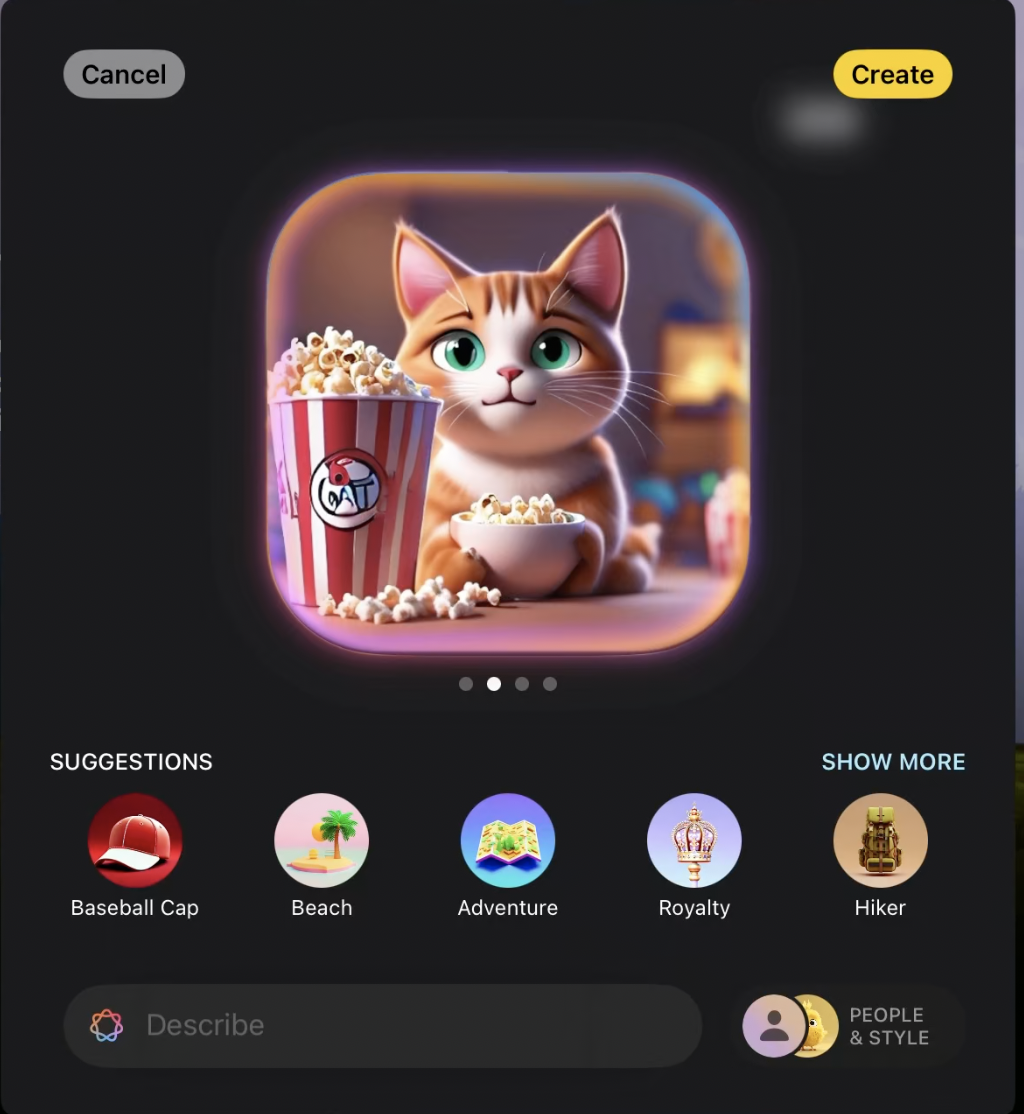
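In UIKit, a standard `UITextView` gets Writing Tools automatically. The sketch below assumes an iOS 18 SDK. It shows how an app might explicitly opt a text view into the full inline experience through the `writingToolsBehavior` property. The names `makeNotesTextView()` and `NotesViewController` are hypothetical and used only for illustration.

```swift
import UIKit

// Hypothetical helper: returns a text view opted into the full Writing Tools
// experience, including inline rewriting and proofreading (iOS 18 SDK assumed).
@MainActor
func makeNotesTextView() -> UITextView {
    let textView = UITextView()
    textView.writingToolsBehavior = .complete
    return textView
}

// Hypothetical view controller that hosts the text view full-screen.
final class NotesViewController: UIViewController {
    override func viewDidLoad() {
        super.viewDidLoad()
        let textView = makeNotesTextView()
        textView.frame = view.bounds
        textView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        view.addSubview(textView)
    }
}
```

Other values are available. Setting `.limited` keeps Writing Tools in an overlay panel instead of editing text inline, and `.none` opts the view out entirely.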

Image Playground: Creative Freedom with On-Device Intelligence

One of the most exciting announcements is Image Playground, which lets users create images with Apple Intelligence directly on their devices. Developers can integrate it into their apps with just a few lines of code, without training their own models or designing their own safety guardrails. Image Playground runs on the Neural Engine in Apple silicon, such as the M1, M2, M3, and M4 chip families. As a result, users can generate as many images as they like locally, and results arrive quickly without a round trip to the cloud.
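Here is a rough illustration of those "few lines of code." It assumes the `ImagePlayground` framework in iOS 18.x SDKs; the API shipped after the initial iOS 18 release. A SwiftUI view can present the system sheet with the `imagePlaygroundSheet` modifier and check device support through the `supportsImagePlayground` environment value. The `AvatarMaker` view and its concept string are hypothetical.

```swift
import SwiftUI
import ImagePlayground

// Hypothetical view that asks Image Playground for an on-device image.
struct AvatarMaker: View {
    // False on devices that cannot run Image Playground.
    @Environment(\.supportsImagePlayground) private var supportsImagePlayground
    @State private var isShowingPlayground = false
    // File URL of the most recently generated image, if any.
    @State private var generatedImageURL: URL?

    var body: some View {
        VStack {
            Button("Create Avatar") { isShowingPlayground = true }
                .disabled(!supportsImagePlayground)
            if let url = generatedImageURL {
                Text("Saved image: \(url.lastPathComponent)")
            }
        }
        // Presents the system sheet, seeded with a text concept; the closure
        // receives a file URL for the image the user picks.
        .imagePlaygroundSheet(isPresented: $isShowingPlayground,
                              concept: "a friendly robot") { url in
            generatedImageURL = url
        }
    }
}
```

The app never touches a model directly. It hands the system a concept and receives a finished image file back.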

Siri: Smarter, More Natural, and More Personal

Siri has received significant upgrades that make it more natural, more contextually relevant, and more personal. Developers can expose their apps' actions to Siri through the App Intents framework, so Siri can carry out tasks in and across apps. Together, these changes help Siri understand a wider range of requests and respond to them more accurately.
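The following is a minimal sketch of exposing an app action through App Intents. It declares an `AppIntent` and registers a spoken phrase with an `AppShortcutsProvider`. The workout intent, its title, phrase, and SF Symbol name are hypothetical, and the `perform()` body stands in for real app logic.

```swift
import AppIntents

// Hypothetical action that an app exposes to Siri and Shortcuts.
struct StartWorkoutIntent: AppIntent {
    static let title: LocalizedStringResource = "Start Workout"

    func perform() async throws -> some IntentResult & ProvidesDialog {
        // App-specific logic (for example, starting a timer) would go here.
        return .result(dialog: "Your workout has started.")
    }
}

// Registers a phrase so the intent is available to Siri without user setup.
struct WorkoutShortcuts: AppShortcutsProvider {
    static var appShortcuts: [AppShortcut] {
        AppShortcut(
            intent: StartWorkoutIntent(),
            phrases: ["Start a workout in \(.applicationName)"],
            shortTitle: "Start Workout",
            systemImageName: "figure.run"
        )
    }
}
```

Including `\(.applicationName)` in each phrase lets Siri tell which app should handle the request.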

The Vision Framework: Expanding Visual Intelligence

The Vision framework has been expanded with a broader range of visual intelligence capabilities, including text recognition, face detection, and body pose recognition. These capabilities let developers build apps that interpret and respond to images and video more effectively.
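The following sketch shows text recognition as one concrete example, using Vision's long-established `VNRecognizeTextRequest` API. It configures a request, runs it on a `CGImage` through `VNImageRequestHandler`, and keeps the top candidate string for each detected text region. The helper names `makeTextRequest()` and `recognizeText(in:)` are hypothetical.

```swift
import Vision
import CoreGraphics

// Configures a text-recognition request; .accurate trades speed for quality.
func makeTextRequest() -> VNRecognizeTextRequest {
    let request = VNRecognizeTextRequest()
    request.recognitionLevel = .accurate
    request.usesLanguageCorrection = true
    return request
}

// Runs the request on an image and returns the best string for each text region.
func recognizeText(in image: CGImage) throws -> [String] {
    let request = makeTextRequest()
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])
    let observations = request.results ?? []
    return observations.compactMap { $0.topCandidates(1).first?.string }
}
```

For live camera feeds, switching `recognitionLevel` to `.fast` usually gives better responsiveness at some cost in accuracy.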

New Framework for Language Translation

Apple also introduced the Translation framework, which performs direct language-to-language translation locally on the device. It offers a system-provided translation UI, a flexible API, and efficient batching of multiple strings, making it easier for developers to add translation to their apps. Because processing happens on-device, translations are fast and user text stays private.
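The following sketch assumes the iOS 18 / macOS 15 SDK and illustrates the batching API. A SwiftUI view sets a `TranslationSession.Configuration`, which triggers the `translationTask` modifier. The view then translates a whole array of strings with one `translations(from:)` call. The `PhraseList` view, the `makeRequests(for:)` helper, and the English-to-Spanish language pair are hypothetical.

```swift
import SwiftUI
import Translation

// Hypothetical helper: wraps each string in a batch translation request.
func makeRequests(for phrases: [String]) -> [TranslationSession.Request] {
    phrases.map { TranslationSession.Request(sourceText: $0) }
}

// Hypothetical view that translates a list of phrases from English to Spanish.
struct PhraseList: View {
    let phrases: [String]
    @State private var translations: [String] = []
    @State private var configuration: TranslationSession.Configuration?

    var body: some View {
        List(translations, id: \.self) { Text($0) }
            .onAppear {
                // Setting a non-nil configuration starts the task below.
                configuration = TranslationSession.Configuration(
                    source: Locale.Language(identifier: "en"),
                    target: Locale.Language(identifier: "es"))
            }
            .translationTask(configuration) { session in
                do {
                    // Translates the whole batch in a single call.
                    let responses = try await session.translations(
                        from: makeRequests(for: phrases))
                    translations = responses.map(\.targetText)
                } catch {
                    // Sketch only: a real app should surface the error.
                    translations = []
                }
            }
    }
}
```

If an app only needs the built-in UI, the `translationPresentation(isPresented:text:)` modifier presents the system translation sheet without any session code.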

CreateML: Empowering Developers with Custom Models

CreateML, Apple's tool for training machine learning models, has seen significant enhancements. It lets developers train their own CoreML models for Apple devices across tasks such as image, sound, text, hand pose, and action classification. CreateML Components also enable training within apps on Apple's platforms, including watchOS, tvOS, iOS, iPadOS, macOS, and visionOS.
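Create ML is also available as a Swift framework on macOS. The following is a minimal sketch using a made-up, four-sentence dataset. It trains a text classifier, classifies a new sentence, and exports the result as a Core ML model file. The column names, labels, and output file name are hypothetical, and a real model would need far more data.

```swift
import CreateML
import Foundation

// Trains a text classifier on a tiny, made-up sentiment dataset.
// Real models need many more labeled examples per class.
func trainSentimentClassifier() throws -> MLTextClassifier {
    let data: [String: MLDataValueConvertible] = [
        "text": ["I love this", "Fantastic work", "This is terrible", "I hate it"],
        "label": ["positive", "positive", "negative", "negative"],
    ]
    let table = try MLDataTable(dictionary: data)
    return try MLTextClassifier(trainingData: table,
                                textColumn: "text",
                                labelColumn: "label")
}

let classifier = try trainSentimentClassifier()

// Classify new text with the trained model.
let prediction = try classifier.prediction(from: "What a fantastic day")
print(prediction)

// Export a Core ML model that an app can bundle and run on-device.
let outputURL = FileManager.default.temporaryDirectory
    .appendingPathComponent("Sentiment.mlmodel")
try classifier.write(to: outputURL)
```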

CreateML has also added a new object tracking template, along with data source exploration that simplifies inspecting and annotating data before training. New time series models for classification and forecasting are also available, giving developers tools to build apps that make predictions from sequential data.

Running Models on Device: Supporting Diverse Models

Apple's commitment to on-device processing also extends to running large diffusion models and large language models on Apple silicon. These include popular models from the open-source community such as Whisper, Stable Diffusion, Mistral, Llama, Falcon, CLIP, Qwen, and OpenELM. Running such models locally lets developers use state-of-the-art models while keeping user data on the device.
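Models like these are typically converted to Core ML format first, for example with Apple's `coremltools` Python package. The following sketch assumes you already have a converted `.mlmodel` or `.mlpackage` file. It compiles and loads the model with a configuration that lets Core ML schedule work across the CPU, GPU, and Neural Engine. The helper names are hypothetical.

```swift
import CoreML
import Foundation

// Lets Core ML choose among the CPU, GPU, and Neural Engine.
func makeConfiguration() -> MLModelConfiguration {
    let configuration = MLModelConfiguration()
    configuration.computeUnits = .all
    return configuration
}

// Compiles a .mlmodel or .mlpackage (for example, one converted from an
// open-source model) and loads it for on-device inference.
func loadModel(from modelURL: URL) async throws -> MLModel {
    let compiledURL = try await MLModel.compileModel(at: modelURL)
    return try MLModel(contentsOf: compiledURL, configuration: makeConfiguration())
}
```

Compilation can take a while for large models, so an app would normally compile once and cache the resulting `.mlmodelc` directory rather than recompiling on every launch.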

Conclusion

WWDC 2024 has truly showcased Apple's dedication to advancing machine learning and artificial intelligence on its platforms. From enhanced text processing and image creation tools to significant improvements in Siri and new frameworks for visual intelligence and language translation, Apple is empowering developers to build smarter, more intuitive, and more capable applications. With the new capabilities in CreateML and the support for running diverse models on-device, developers have more tools than ever to innovate and push the boundaries of what's possible on Apple platforms.